AI’s Role in the Evolution of DeFi Scams

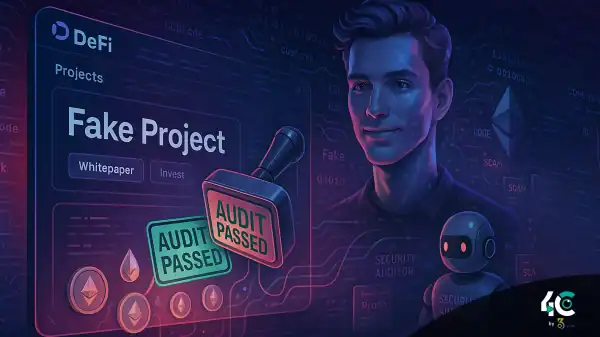

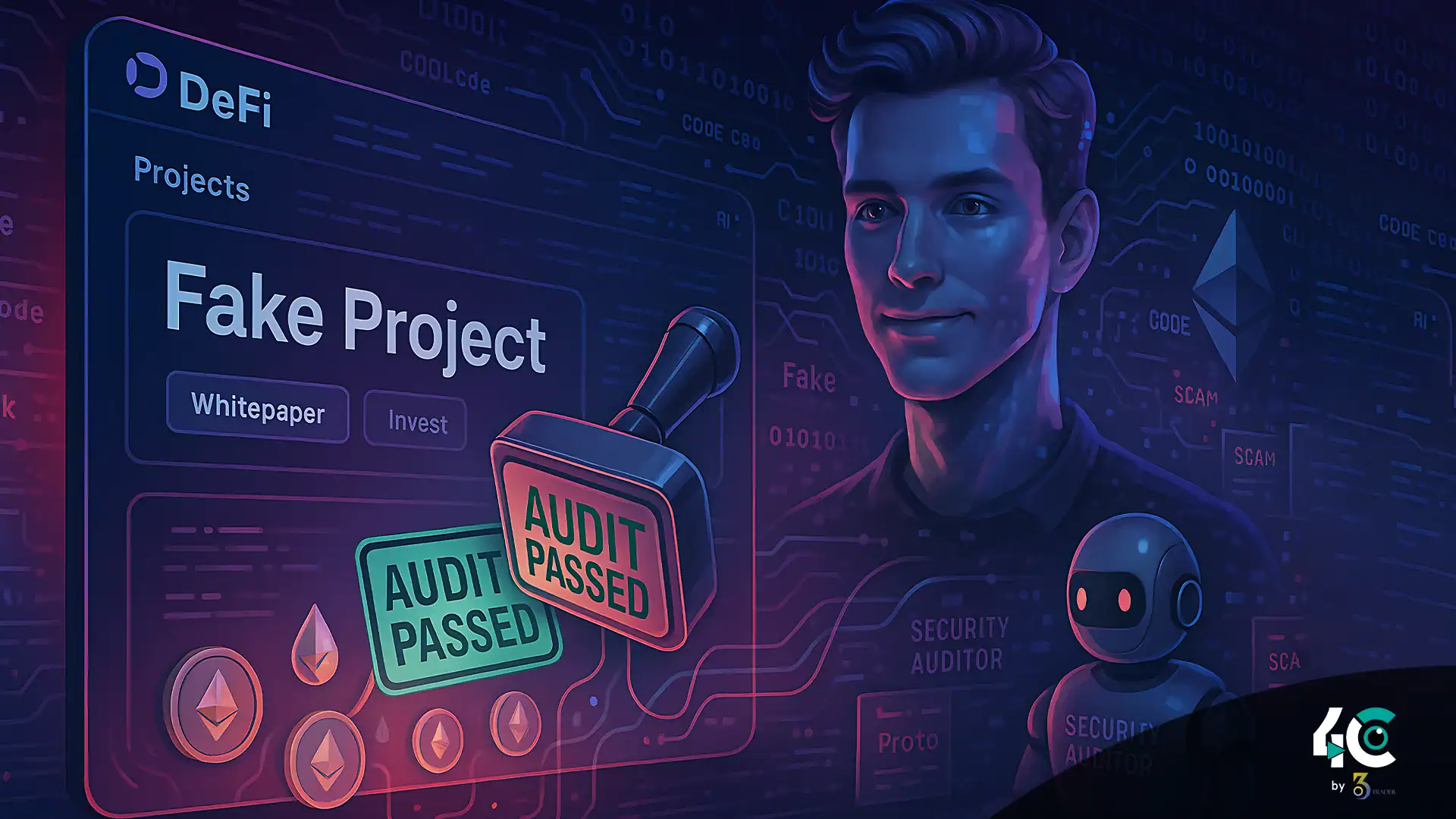

As DeFi grows, so do its scams. Officials and auditors have been blindsided by a new threat: deepfake DeFi. Much like deepfake videos, deepfake DeFi projects use artificial intelligence (AI) to fabricate authentic-looking blockchain projects. They invent fake teams, auto-generate whitepapers, and even generate the smart contracts themselves.

Shockingly, some of these projects are passing audits.

How Deepfake DeFi Works

Scammers are increasingly using AI tools to scale their fraud. Here’s the playbook:

- Using ChatGPT or similar tools, fraudsters auto-generate technical white papers filled with jargon and diagrams.

- They utilize deepfake images or AI-generated headshots to create fake team members with convincing LinkedIn profiles and fake activity records.

- They generate or fork smart contracts with AI coding tools, sometimes hiding logic that lets the deployers drain deposited funds.

- They obtain audits from lightweight or automated firms, most of which check only for technical issues. If the code works, it passes.

- Scammers launch platforms and promote them on X (formerly Twitter), Telegram, and Discord, farming engagement with AI-written posts. Once enough funds are deposited, they execute a rug pull and vanish.
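To see why "if the code works, it passes" is a weak standard, consider a deliberately simplified model written in Python rather than Solidity. `ToyVault`, `emergency_sweep`, and `functional_checks` are hypothetical names for illustration only, not real contract code or any audit firm's tooling. A purely functional test of deposits and withdrawals passes, while an owner-only function can still empty the vault.

```python
class ToyVault:
    """Hypothetical, simplified vault model; not real smart contract code."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.balances: dict[str, int] = {}
        self.total = 0

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total += amount

    def withdraw(self, user: str, amount: int) -> None:
        if amount <= 0 or amount > self.balances.get(user, 0):
            raise ValueError("invalid amount or insufficient balance")
        self.balances[user] -= amount
        self.total -= amount

    def emergency_sweep(self, caller: str) -> int:
        # Hidden backdoor: the owner can take every user's deposit at once.
        if caller != self.owner:
            raise PermissionError("owner only")
        swept = self.total
        self.balances.clear()
        self.total = 0
        return swept


def functional_checks(vault_cls) -> bool:
    """A purely functional test: do deposits and withdrawals behave as expected?"""
    vault = vault_cls(owner="deployer")
    vault.deposit("alice", 100)
    vault.withdraw("alice", 40)
    return vault.balances["alice"] == 60 and vault.total == 60


if __name__ == "__main__":
    print(functional_checks(ToyVault))  # True: the functional check passes
    vault = ToyVault(owner="deployer")
    vault.deposit("alice", 100)
    vault.deposit("bob", 50)
    print(vault.emergency_sweep("deployer"))  # 150: every deposit is gone
```

Real audits are far more thorough than this toy test, and an owner-only sweep is only one possible backdoor. The point is that a check asking "does it work?" never asks "who can take the money?"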

Why This Is Working

Audits aren’t foolproof. Most audit firms focus on code vulnerabilities, not project legitimacy or intent. If a smart contract passes technical checks, it is assumed to be safe.

Meanwhile, investors often equate audits, whitepapers, and doxxed teams with safety. Deepfake DeFi exploits that trust—using AI to simulate professional authenticity.

Cases in the Wild

Blockchain investigators and whistleblowers have traced several 2025 rug pulls that fit this pattern, though many remain unreported. In one notable example, a platform with a 40-page whitepaper and “audited” token contracts vanished with $7 million. Days later, its supposed founders were revealed to be AI-generated personas.

How to Spot Deepfake DeFi

Investors and platforms must improve their scam detection. Watch for these red flags:

- White papers filled with vague technical talk and marketing hype

- Team bios with inconsistent or low social media engagement

- Audit reports from unknown or automated firms, lacking on-chain verification

- Front ends with no GitHub development history

- Claims of “decentralization” used to justify anonymous teams
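The red flags above can be turned into a simple checklist. The sketch below is a hypothetical illustration: the `ProjectProfile` fields and the `red_flags` function are assumptions, and each input is something an investor would still have to judge or verify by hand (for example, whether an audit report matches the deployed contract). It only lists which warning signs are present; it does not certify a project as safe.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    """Hypothetical due-diligence inputs, each gathered or judged by hand."""
    whitepaper_is_vague: bool           # mostly jargon and marketing hype
    team_engagement_consistent: bool    # bios match steady, real social activity
    auditor_is_known: bool              # audit firm has a public track record
    audit_verified_onchain: bool        # audited code matches the deployed contract
    github_commit_count: int            # public development history
    team_is_anonymous: bool
    cites_decentralization_for_anonymity: bool


def red_flags(p: ProjectProfile) -> list[str]:
    """Return the warning signs present; an empty list is not proof of safety."""
    flags: list[str] = []
    if p.whitepaper_is_vague:
        flags.append("vague, hype-heavy whitepaper")
    if not p.team_engagement_consistent:
        flags.append("inconsistent or low team engagement")
    if not (p.auditor_is_known and p.audit_verified_onchain):
        flags.append("unknown or unverified audit")
    if p.github_commit_count == 0:
        flags.append("no GitHub development history")
    if p.team_is_anonymous and p.cites_decentralization_for_anonymity:
        flags.append("'decentralization' used to justify anonymity")
    return flags


if __name__ == "__main__":
    suspicious = ProjectProfile(
        whitepaper_is_vague=True,
        team_engagement_consistent=False,
        auditor_is_known=False,
        audit_verified_onchain=False,
        github_commit_count=0,
        team_is_anonymous=True,
        cites_decentralization_for_anonymity=True,
    )
    for flag in red_flags(suspicious):
        print("-", flag)
```

Keeping the inputs as explicit, named fields makes it obvious which judgments were actually checked and which were assumed.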

What Needs to Change

To defend against future deepfake scams, the DeFi industry should:

- Create audit standards that evaluate project legitimacy, not just code functionality

- Promote analysis of GitHub contributions over whitepaper reviews

- Use facial recognition and social verification tools to catch deepfakes

- Empower DAOs to perform open community vetting of projects
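As a rough sketch of what analyzing GitHub contributions might look like, the code below summarizes commit history before a launch date. It assumes the commits have already been exported as author and timestamp pairs; `Commit`, `contribution_summary`, `looks_rushed`, and the default thresholds are hypothetical choices for illustration, not an industry standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Commit:
    author: str
    timestamp: datetime


def contribution_summary(commits: list[Commit], launch: datetime) -> dict:
    """Summarize public development activity up to a (hypothetical) launch date."""
    before = [c for c in commits if c.timestamp <= launch]
    if not before:
        # No visible history at all before launch.
        return {"authors": 0, "history_days": 0, "last_week_share": 0.0}
    first = min(c.timestamp for c in before)
    # Commits made in the final 7 days before launch.
    last_week = [c for c in before if launch - c.timestamp <= timedelta(days=7)]
    return {
        "authors": len({c.author for c in before}),
        "history_days": (launch - first).days,
        "last_week_share": len(last_week) / len(before),
    }


def looks_rushed(summary: dict, min_days: int = 90, min_authors: int = 2,
                 max_last_week_share: float = 0.5) -> bool:
    """Illustrative thresholds only; tune or replace them for real use."""
    return (
        summary["history_days"] < min_days
        or summary["authors"] < min_authors
        or summary["last_week_share"] > max_last_week_share
    )


if __name__ == "__main__":
    launch = datetime(2025, 1, 31)
    rushed = [Commit("dev1", datetime(2025, 1, d)) for d in (28, 29, 30)]
    steady = [
        Commit("alice", datetime(2024, 3, 1)),
        Commit("bob", datetime(2024, 6, 1)),
        Commit("alice", datetime(2024, 9, 1)),
        Commit("bob", datetime(2025, 1, 30)),
    ]
    print(looks_rushed(contribution_summary(rushed, launch)))  # True
    print(looks_rushed(contribution_summary(steady, launch)))  # False
```

A repository created a few days before launch by a single author would be flagged, while months of multi-author history would not. Git commit dates can be backdated, so a summary like this complements community vetting rather than replacing it.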

Conclusion

The rise of deepfake DeFi highlights a broader issue: AI is making deception easier and more scalable. As Web3 evolves, trust must be rooted in verifiable transparency, not just surface signals. Audits alone are no longer enough. Without deeper diligence, the next big scam may be backed not by humans but by bots.