Data Oracles and the New AI Threat

Data oracles bridge the real world and smart contracts in decentralized finance (DeFi). Oracle services feed prices, weather reports, and other off-chain data to blockchains, which cannot fetch that information on their own.

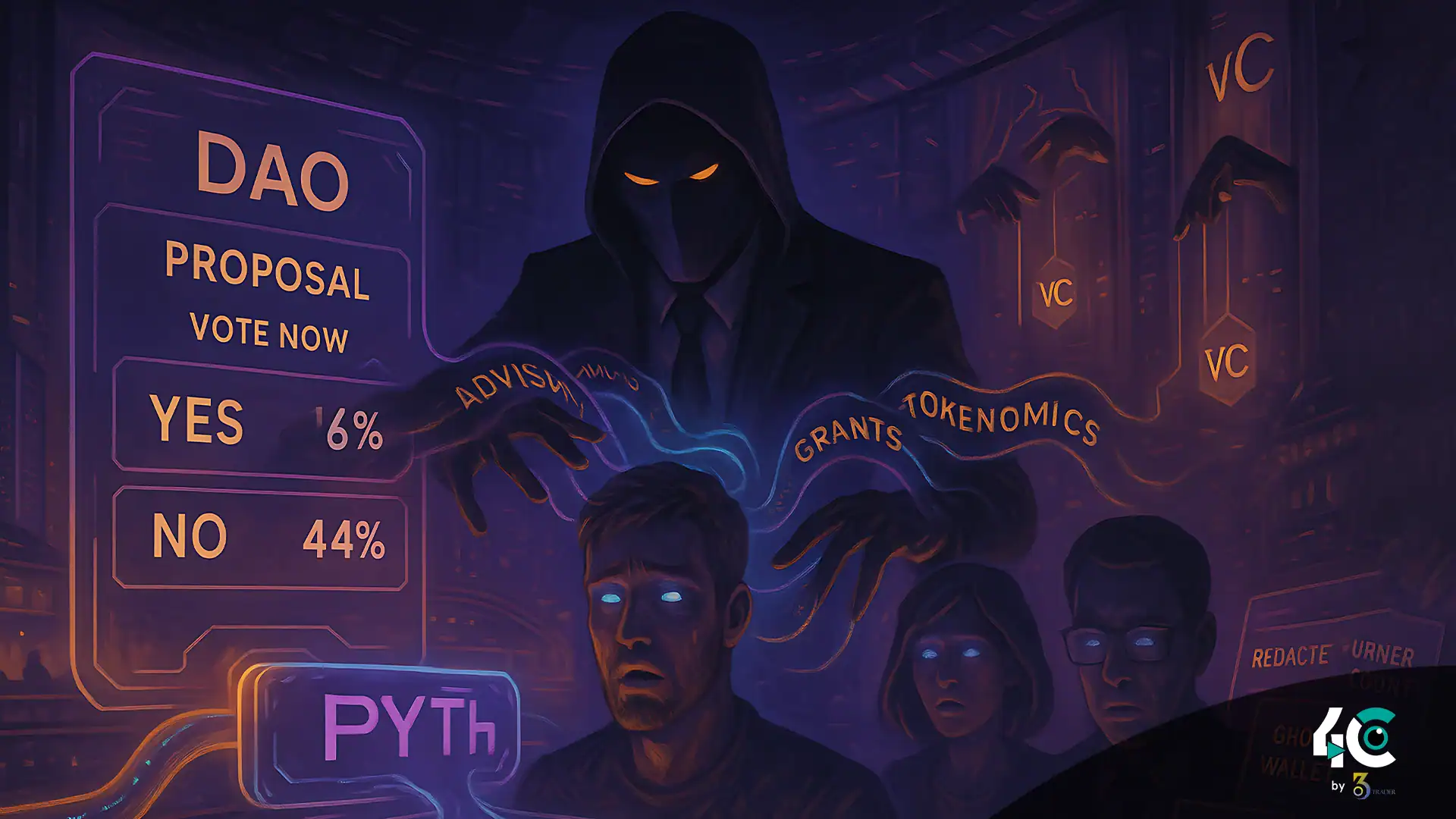

Now a new attack is emerging in which artificial intelligence models such as ChatGPT generate fake data to manipulate oracle feeds and, through them, crypto prices.

How the AI Oracle Hack Works

At the core of DeFi are smart contracts that trigger actions, such as liquidations and changes to lending terms, based on information from oracles. A contract cannot tell good data from bad: when its input is wrong, it faithfully executes the wrong action. Here’s how attackers manipulate that data stream:

- Attackers use language models like ChatGPT to produce fake but convincing news bulletins, market-sentiment posts, and pricing information.

- This AI-generated content is planted on platforms or websites that act as unofficial data sources for oracles, especially oracles that scrape web content instead of querying vetted feeds.

- The oracles interpret this fake data as real.

- Smart contracts are triggered based on these false inputs.

- In some cases, bots distribute this AI-generated content on Twitter, Reddit, and crypto news feeds, enhancing the illusion.
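To make the pipeline concrete, here is a minimal Python sketch of a naive scraping oracle. Everything in it is hypothetical: the token `XYZ`, the regex `PRICE_PATTERN`, and the functions `scrape_prices` and `naive_oracle_price` are illustrative stand-ins, not any real oracle’s code. It simply pulls dollar amounts out of scraped posts and averages them, which is enough to show how a flood of synthetic bulletins drags the reported price.

```python
import re
from statistics import mean

# Hypothetical extraction rule: treat any "$<number>" in a post as a price claim.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d+)?)")


def scrape_prices(posts: list[str]) -> list[float]:
    """Pull every dollar amount out of a batch of scraped posts."""
    prices: list[float] = []
    for post in posts:
        prices.extend(float(match) for match in PRICE_PATTERN.findall(post))
    return prices


def naive_oracle_price(posts: list[str]) -> float:
    """Report the mean of all scraped price claims, with no source vetting."""
    return mean(scrape_prices(posts))


genuine_posts = [
    "XYZ closed the day at $1.00 on major exchanges.",
    "Order books show XYZ holding near $1.02.",
    "XYZ spot price steady around $0.98.",
]

# Synthetic bulletins an attacker could mass-produce with a language model
# and plant on sites the oracle scrapes.
fake_posts = [
    f"BREAKING: whales dump XYZ, now trading at $0.40 (report #{i})"
    for i in range(6)
]

print(naive_oracle_price(genuine_posts))               # roughly 1.00
print(naive_oracle_price(genuine_posts + fake_posts))  # roughly 0.60
```

With only the genuine posts the sketch reports about $1.00; adding the six fake bulletins drops it to about $0.60. Swapping the mean for a median would not help (it would report $0.40), because the attacker’s posts are now the majority of the inputs, and cheap, high-volume content is exactly what language models provide.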

Real Examples and Early Signs

While no major DeFi platforms have been confirmed as victims, researchers have flagged numerous small protocols showing suspicious oracle behavior linked to fake news. In one case, a little-known token surged 80% after AI-generated rumors appeared on news aggregators that its oracle scraped.

Why It’s So Dangerous

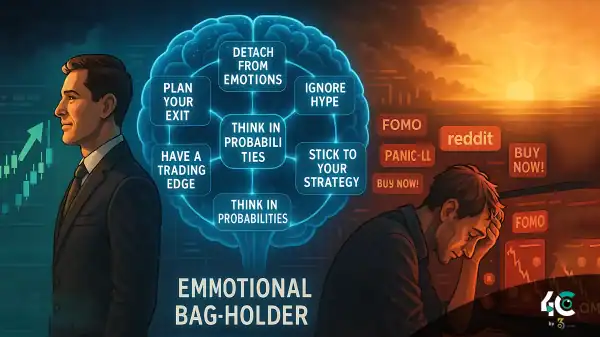

The AI Oracle Hack doesn’t break code—it attacks the input layer. Because the data looks legitimate, it can deceive both machines and humans.

The risks include:

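- Flash loan exploits made easier with fake pricing, since an attacker can borrow large sums without collateral and trade against the false price within a single transaction.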

- Cascading liquidations triggered by artificial price drops, as each forced sale pushes the price lower still.

- False narratives fueling pump-and-dump schemes.
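The cascading-liquidation risk can be illustrated with a toy lending book. This Python sketch is a simplification under stated assumptions: the 80% `LIQUIDATION_THRESHOLD`, the `PRICE_IMPACT_PER_UNIT`, the positions, and the helpers `is_liquidatable` and `run_liquidations` are all hypothetical, and it assumes the oracle immediately reflects the market price after each forced sale. It does not reproduce any real protocol’s liquidation logic.

```python
from dataclasses import dataclass

# Hypothetical parameters, chosen only for illustration.
LIQUIDATION_THRESHOLD = 0.8     # debt may not exceed 80% of collateral value
PRICE_IMPACT_PER_UNIT = 0.0001  # each unit of collateral sold cuts the price by 0.01%


@dataclass
class Position:
    owner: str
    collateral: float  # units of the volatile token
    debt: float        # stablecoins owed
    liquidated: bool = False


def is_liquidatable(position: Position, price: float) -> bool:
    """A position is unsafe once its discounted collateral value falls below its debt."""
    return position.collateral * price * LIQUIDATION_THRESHOLD < position.debt


def run_liquidations(positions: list[Position], oracle_price: float) -> tuple[list[str], float]:
    """Liquidate unsafe positions until none remain, feeding each sale's price impact back in."""
    price = oracle_price
    liquidated: list[str] = []
    changed = True
    while changed:
        changed = False
        for position in positions:
            if not position.liquidated and is_liquidatable(position, price):
                position.liquidated = True
                liquidated.append(position.owner)
                # Dumping the seized collateral pushes the market (and, by assumption,
                # the oracle) price lower, which may endanger the next position.
                price *= (1 - PRICE_IMPACT_PER_UNIT) ** position.collateral
                changed = True
    return liquidated, price


def make_book() -> list[Position]:
    """A fresh set of hypothetical borrowers, all healthy at a price of $1.00."""
    return [
        Position("alice", collateral=1000, debt=700),
        Position("bob", collateral=1000, debt=620),
        Position("carol", collateral=1000, debt=500),
    ]


print(run_liquidations(make_book(), oracle_price=1.00))  # no liquidations at the true price
print(run_liquidations(make_book(), oracle_price=0.85))  # a faked 15% drop
```

At the true price of $1.00 every position is safe. At a faked $0.85 only Alice is underwater, but selling her collateral pushes the price to about $0.77, which tips Bob into liquidation; his sale drags the price to about $0.70. Carol survives only because she borrowed less.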

Oracles in the Crosshairs

DeFi’s reliance on oracles is now a point of vulnerability. Even established providers like Chainlink and Band Protocol depend on off-chain data. If those data sources become corrupted, the damage would not stay local: many protocols read the same feeds, so a single poisoned input could ripple across the ecosystem at once.
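One reason corrupted off-chain sources are so dangerous is that aggregation schemes such as taking a median implicitly assume the sources fail independently. The Python sketch below takes the median of five hypothetical reports; the source names and prices are made up, and this is not Chainlink’s or Band Protocol’s actual aggregation code. A median shrugs off one bad reporter, but if three sources happen to scrape the same poisoned aggregator, the attacker controls the result.

```python
from statistics import median


def aggregate(reports: dict[str, float]) -> float:
    """Combine source reports by taking their median."""
    return median(list(reports.values()))


honest = {"source_a": 1.00, "source_b": 1.01, "source_c": 0.99,
          "source_d": 1.00, "source_e": 1.02}

# One compromised source: the median ignores the outlier.
one_bad = {**honest, "source_e": 0.40}

# Three sources that all scrape the same poisoned aggregator fail together.
correlated_bad = {**honest, "source_c": 0.40, "source_d": 0.40, "source_e": 0.40}

print(aggregate(honest))          # 1.0
print(aggregate(one_bad))         # 1.0
print(aggregate(correlated_bad))  # 0.4
```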

What Can Be Done?

DeFi Protocols and Data Providers Must Act

- Use on-chain verified data only. Avoid scraped or sentiment-driven feeds.

- Implement reputation systems that penalize unreliable or manipulated sources.

- Deploy AI-detection tools to screen for fake or synthetic content.

- Consider DAO-based moderation, in which community members manually review key information before it reaches the chain.
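A reputation system of the kind suggested above could look like the following Python sketch, which is one possible design rather than an established standard. The class `ReputationOracle`, the helper `weighted_median`, and the `TOLERANCE`, `PENALTY`, and `REWARD` values are hypothetical. Each round, sources are weighted by reputation; any source that strays more than 2% from the weighted consensus has its reputation halved, while accurate sources slowly recover.

```python
# Hypothetical tuning parameters.
TOLERANCE = 0.02      # relative deviation from consensus that still counts as accurate
PENALTY = 0.5         # multiply reputation by this after an inaccurate report
REWARD = 0.05         # add this after an accurate report, capped at MAX_REPUTATION
MAX_REPUTATION = 1.0


def weighted_median(pairs: list[tuple[float, float]]) -> float:
    """Return the value at which cumulative weight first reaches half the total."""
    total = sum(weight for _, weight in pairs)
    if total <= 0:
        raise ValueError("at least one source needs positive reputation")
    ordered = sorted(pairs)
    running = 0.0
    for value, weight in ordered:
        running += weight
        if running >= total / 2:
            return value
    return ordered[-1][0]  # only reachable through floating-point rounding


class ReputationOracle:
    def __init__(self, sources: list[str]) -> None:
        # Every source starts fully trusted.
        self.reputation = {source: MAX_REPUTATION for source in sources}

    def update(self, reports: dict[str, float]) -> float:
        """Compute a reputation-weighted consensus, then re-score each source against it.

        Assumes all reported prices are positive.
        """
        consensus = weighted_median(
            [(price, self.reputation[source]) for source, price in reports.items()]
        )
        for source, price in reports.items():
            if abs(price - consensus) / consensus > TOLERANCE:
                self.reputation[source] *= PENALTY
            else:
                self.reputation[source] = min(
                    MAX_REPUTATION, self.reputation[source] + REWARD
                )
        return consensus


oracle = ReputationOracle(["a", "b", "c", "d", "e"])

# Rounds 1-3: sources d and e repeatedly relay a fabricated $0.40 price.
for _ in range(3):
    oracle.update({"a": 1.00, "b": 1.00, "c": 1.00, "d": 0.40, "e": 0.40})

# Round 4: source c is also poisoned, so three of five reports now say $0.40.
round_4 = {"a": 1.00, "b": 1.00, "c": 0.40, "d": 0.40, "e": 0.40}
print(oracle.reputation["d"])  # 0.125
print(oracle.update(round_4))  # 1.0
```

After three rounds of bad reports, sources `d` and `e` carry only 0.125 weight each, so when `c` joins them in round 4 the weighted consensus still holds at $1.00, whereas a plain, unweighted median of the same five reports would be $0.40. The design only helps against sources that misbehave repeatedly; a majority of trusted sources poisoned for the first time would still win, which is why the other measures in the list matter too.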

A New Era of Data Security

The widespread use of AI-generated content challenges more than just DeFi. It forces us to redefine “truth” in an age when machines can convincingly fabricate reality. For decentralized finance to thrive, it must develop smarter, more resilient oracles and a community that understands the danger of fake data.

Conclusion

The AI Oracle Hack is a subtle yet powerful shift in crypto exploitation. It doesn’t hack code—it hacks perception. Unless DeFi evolves its trust layers to withstand synthetic data threats, the next black swan won’t come from a vulnerability in the codebase, but from a cleverly crafted bot.